The Model Context Protocol (MCP) is an open-source protocol developed by Anthropic that standardises how AI applications connect with external data sources and tools. It essentially functions as the “USB-C for AI apps”.

Large Language Models (LLMs) like Claude, ChatGPT, Gemini, and Llama have revolutionised how we interact with information and technology. However, they face a fundamental limitation: isolation from real-world data and systems. Without a shared standard, each model needs its own custom integration with each tool, which creates what’s known as the “N×M problem,” where N represents the number of LLMs and M represents the number of tools or data sources that need integration.

MCP addresses this challenge by providing a universal, open standard for connecting AI systems with data sources, replacing fragmented integrations with a single protocol. Just as USB-C offers a standardised way to connect devices to various peripherals, MCP provides a standardised way to connect AI models to different data sources and tools.
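To make the N×M problem concrete, here is a small arithmetic sketch. The N+M figure for a shared protocol is the usual way this argument is framed rather than something measured, and the function name and the sample numbers (4 models, 10 tools) are made up for illustration.

```python
def integration_counts(n_models: int, m_tools: int) -> tuple:
    """Return (bespoke integrations, implementations needed with a shared protocol)."""
    bespoke = n_models * m_tools  # every model wired separately to every tool
    shared = n_models + m_tools   # each side implements the protocol once
    return bespoke, shared


bespoke, shared = integration_counts(4, 10)
print(f"bespoke: {bespoke}, shared protocol: {shared}")
# prints "bespoke: 40, shared protocol: 14"
```

The gap widens as either side grows, which is why a single protocol becomes more attractive as the ecosystem expands.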

Understanding the Core of MCP

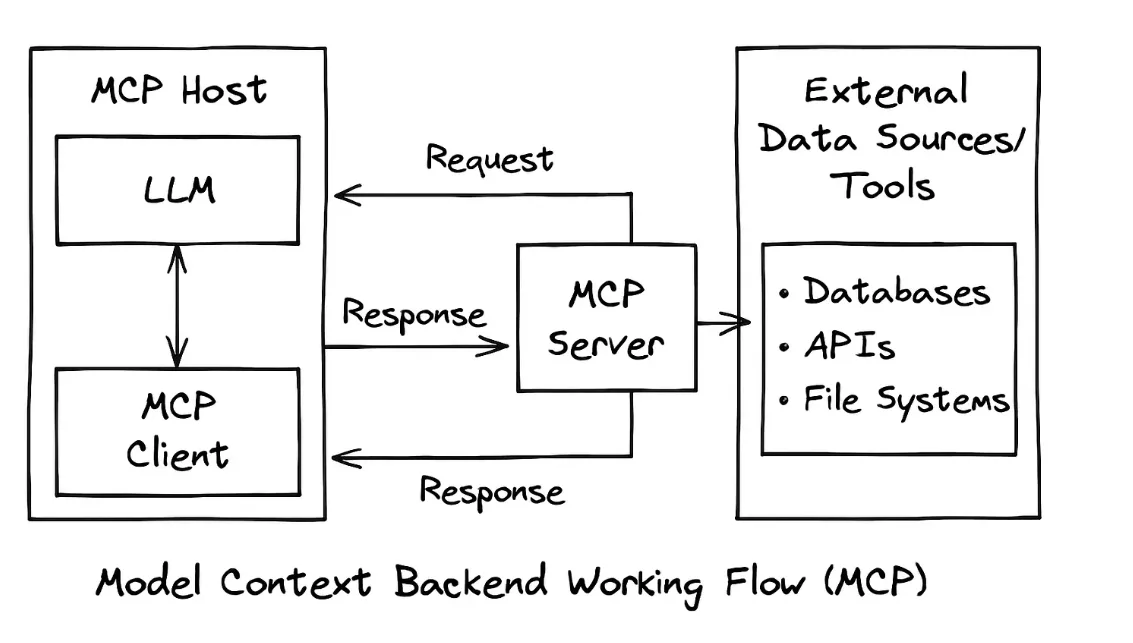

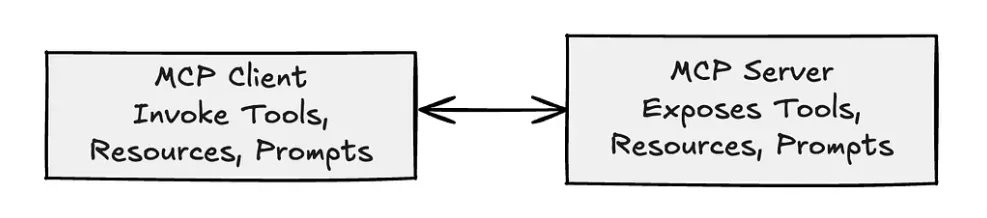

MCP is an open protocol that standardises how applications provide context to LLMs. It enables developers to build secure, two-way connections between their data sources and AI-powered tools through a straightforward architecture. Developers can either expose their data through MCP servers or build AI applications (MCP clients) that connect to these servers.

MCP Host: Programs like Claude Desktop, Cursor IDE, or AI tools that want to access data through MCP. They contain the orchestration logic and can connect each client to a server.

MCP Client: Protocol clients that maintain 1:1 connections with servers. They exist within the MCP host and convert user requests into a structured format that the open protocol can process.

MCP Servers: Lightweight programs that each expose specific capabilities through the standardised Model Context Protocol. They act as wrappers for external systems, tools, and data sources.

How does MCP standardise communication between AI applications and external systems?

MCP uses JSON-RPC 2.0 as the underlying message standard, providing a standardised structure for requests, responses, and notifications. This ensures consistent communication across different AI systems and external tools.
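The three JSON-RPC 2.0 message shapes can be sketched in a few lines of Python. The envelope fields (`jsonrpc`, `id`, `method`, `params`, `result`, `error`) come from the JSON-RPC 2.0 specification. The helper names are hypothetical, and the method strings follow the naming I understand the MCP specification to use, so treat them as illustrative.

```python
import json
from typing import Any, Optional


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> dict:
    """A request carries an id, so the receiver must answer it."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_notification(method: str, params: Optional[dict] = None) -> dict:
    """A notification has no id, so no response is expected."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    return message


def make_result(request_id: int, result: Any) -> dict:
    """A successful response echoes the id of the request it answers."""
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


def make_error(request_id: int, code: int, text: str) -> dict:
    """A failed response carries an error object instead of a result."""
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": text}}


print(json.dumps(make_request(1, "tools/list")))
print(json.dumps(make_notification("notifications/initialized")))
print(json.dumps(make_result(1, {"tools": []})))
print(json.dumps(make_error(1, -32601, "Method not found")))
```

The presence or absence of `id` is what distinguishes a request from a notification, and a response carries either `result` or `error`, never both.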

The Mechanics of MCP: How it Works Under the Hood

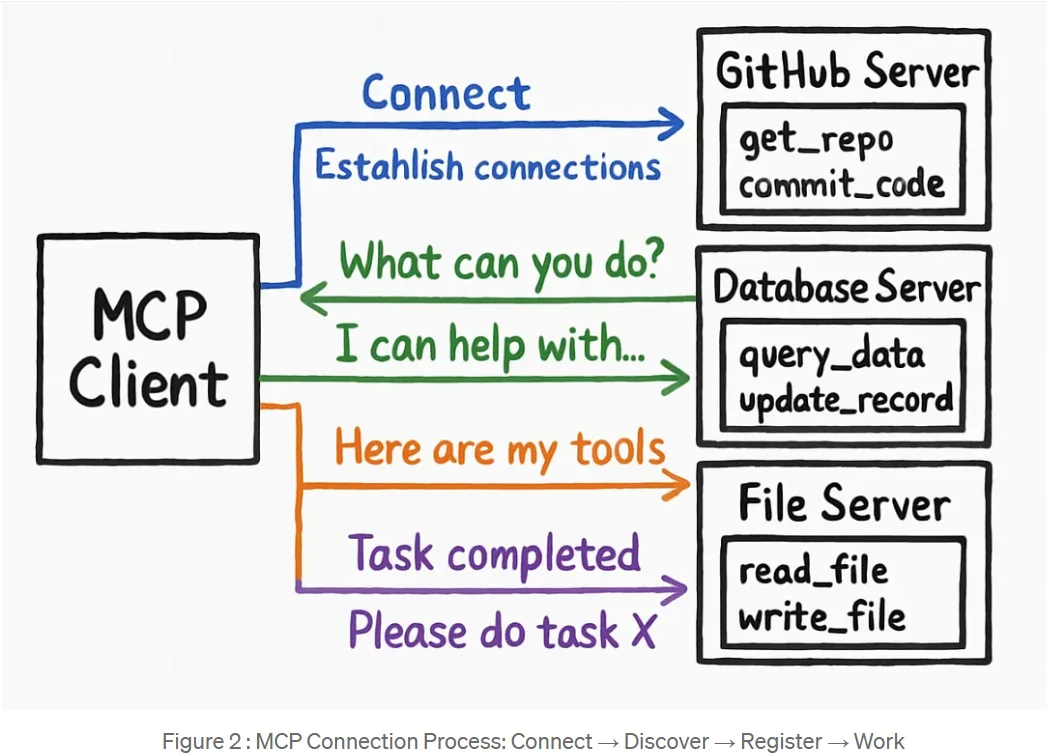

When an MCP client starts up, it’s like walking into a new office building and meeting your team for the first time. You need to introduce yourself, learn what everyone can do, and then start collaborating.

The “Connect” Phase: The MCP client reaches out to all configured servers simultaneously. In the given diagram, the client connects to three specialised servers — a GitHub Server, Database Server, and File Server. This connection happens through various methods like STDIO pipes or HTTP (server-sent events), but the key point is that all connections are established upfront.

The “Discovery” Phase: Now comes the getting-to-know-you conversation. The client essentially asks each server, “What can you do?” This isn’t a one-way conversation — it’s more like a friendly chat where each server responds with “I can help with…” followed by their specialities, which means that they expose all of their tools to the client. The GitHub Server might say it can help with repositories and code, while the Database Server offers data queries and updates.

The “Registration” Phase: This is where things get practical. Each server provides its detailed “business card” by sharing its complete toolkit. As shown in the diagram, the GitHub Server lists tools like get_repo and commit_code. The Database Server offers query_data and update_record. The File Server provides read_file and write_file. The client carefully catalogues all these capabilities for future use.

The “Working” Phase: Finally, it’s time to get things done! The client can now make specific requests like “Please do task X” to any server and receive “Task completed” responses. The client seamlessly orchestrates work across multiple specialised servers, creating powerful AI workflows that leverage each server’s unique strengths.

This entire handshake process transforms a simple client into a powerful orchestrator that can tap into diverse capabilities whenever needed, making MCP the perfect protocol for building sophisticated AI applications.
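The four phases can be sketched with in-memory stand-ins. This is a toy model, not a real MCP client: `FakeServer` and `Client` are hypothetical classes, there is no transport or JSON-RPC involved, and the tool bodies return canned strings. Only the tool names are taken from the diagram described above.

```python
from typing import Callable, Dict, List


class FakeServer:
    """In-memory stand-in for an MCP server (hypothetical, no real transport)."""

    def __init__(self, name: str, tools: Dict[str, Callable[..., str]]):
        self.name = name
        self._tools = tools

    def list_tools(self) -> List[str]:
        return sorted(self._tools)

    def call_tool(self, tool: str, **kwargs) -> str:
        return self._tools[tool](**kwargs)


class Client:
    def __init__(self) -> None:
        self.servers: Dict[str, FakeServer] = {}
        self.catalogue: Dict[str, str] = {}  # tool name -> owning server name

    def connect(self, servers: List[FakeServer]) -> None:
        # Connect phase: keep a handle to every configured server up front.
        for server in servers:
            self.servers[server.name] = server

    def discover_and_register(self) -> None:
        # Discovery and registration: ask each server for its tools, then catalogue them.
        for name, server in self.servers.items():
            for tool in server.list_tools():
                self.catalogue[tool] = name

    def call(self, tool: str, **kwargs) -> str:
        # Working phase: route the request to whichever server registered the tool.
        return self.servers[self.catalogue[tool]].call_tool(tool, **kwargs)


github = FakeServer("github", {
    "get_repo": lambda name: f"repo:{name}",
    "commit_code": lambda message: f"committed:{message}",
})
database = FakeServer("database", {
    "query_data": lambda sql: f"rows for: {sql}",
    "update_record": lambda record_id: f"updated:{record_id}",
})
files = FakeServer("files", {
    "read_file": lambda path: f"contents of {path}",
    "write_file": lambda path: f"wrote {path}",
})

client = Client()
client.connect([github, database, files])
client.discover_and_register()
print(client.call("get_repo", name="demo"))
```

The catalogue is the important piece: once it is built, the caller only needs a tool name, and the client works out which server to contact.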

The Three Primary Interfaces of MCP Servers

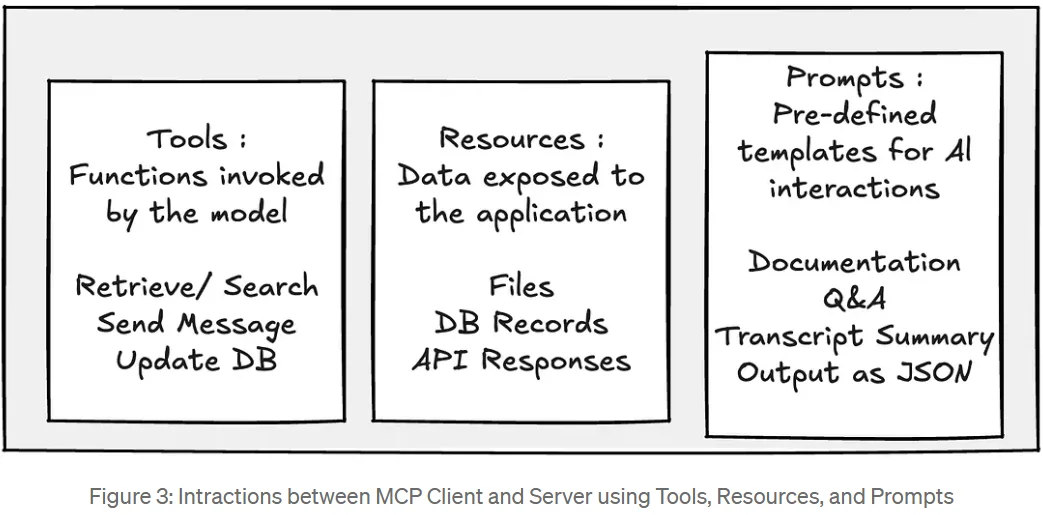

MCP servers expose functionality through three primary interfaces:

- Tools: These are model-controlled functions that the AI can invoke when needed. They can implement anything from reading data to writing data to other systems. Examples include Get weather, Get forecast, or Get alerts. The AI system (like Cursor or Claude Desktop) decides when to use these tools based on the context.

- Resources: These are application-controlled data exposed to the AI system. Resources can be static, such as PDF documents, text files, images, or JSON files. They can also be dynamic, where the server provides instructions on how to dynamically retrieve the data.

- Prompts: These are user-controlled templates for common interactions. They are predefined templates that users can invoke, helping to standardise complex interactions and ensure consistent outputs. Examples include templates for documentation Q&A, transcript summarisation, or outputting data as JSON.
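One way to picture the three interfaces is as three separate registries on a server object. The sketch below is plain Python and is not the API of any MCP SDK: `SketchServer`, its decorators, the `docs://weather/units` URI, and the canned return values are all hypothetical.

```python
from typing import Callable, Dict


class SketchServer:
    """Toy server that keeps tools, resources, and prompts in separate registries."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.tools: Dict[str, Callable[..., str]] = {}
        self.resources: Dict[str, Callable[[], str]] = {}
        self.prompts: Dict[str, Callable[..., str]] = {}

    def tool(self, fn: Callable[..., str]) -> Callable[..., str]:
        self.tools[fn.__name__] = fn
        return fn

    def resource(self, uri: str) -> Callable:
        def register(fn: Callable[[], str]) -> Callable[[], str]:
            self.resources[uri] = fn
            return fn
        return register

    def prompt(self, fn: Callable[..., str]) -> Callable[..., str]:
        self.prompts[fn.__name__] = fn
        return fn


server = SketchServer("weather")


@server.tool
def get_forecast(city: str) -> str:
    """Tool: the model decides when to call this."""
    return f"Forecast for {city}: sunny"  # canned value, no real weather lookup


@server.resource("docs://weather/units")
def units() -> str:
    """Resource: data the application chooses to expose as context."""
    return "Temperatures are in Celsius."


@server.prompt
def summarise_transcript(transcript: str) -> str:
    """Prompt: a template the user invokes explicitly."""
    return f"Summarise the following transcript in three bullet points:\n{transcript}"


print(server.tools["get_forecast"]("Paris"))
print(server.resources["docs://weather/units"]())
```

Keeping the registries separate mirrors the difference in who is in control: the model for tools, the application for resources, and the user for prompts.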

Building and Running Model Context Protocol (MCP) Servers: Simplified Deployment

The Model Context Protocol (MCP) empowers AI models to interact with real-world tools and data. MCP servers are the bridge, exposing specific functions and information to AI clients in a standardised way. Understanding how to build and deploy these servers is key to unlocking AI’s full potential.

Creating Your MCP Server

Developers have several straightforward options for creating MCP servers:

Manual Coding: For precise control, you can write your server from scratch using languages like Python or Node.js, defining its tools, data access, and prompts.

Community Solutions: Leverage a vast library of open-source MCP servers. This allows for quick setup and modification, saving development time by using existing, proven solutions.

Official Integrations: Many companies, like Cloudflare and Stripe, now offer official MCP servers for their services. Always check for these first, as they provide high-quality, well-maintained integrations, ensuring seamless and reliable connections.

The Role of the Transport Layer: STDIO vs. HTTP+SSE (Server-Sent Events)

MCP supports two primary transport methods:

- STDIO (Standard Input/Output): Used for local integrations where the server runs in the same environment as the client

- HTTP+SSE (Server-Sent Events): Used for remote connections, with HTTP for client requests and SSE for server responses and streaming

STDIO Transport: Direct Local Communication

STDIO (Standard Input/Output) transport creates a direct communication pipeline between the MCP client and server running on the same machine. The client launches the server as a child process and connects through stdin/stdout streams, enabling ultra-fast message exchange without network overhead. This approach is perfect for local AI assistants, development environments, and desktop applications where maximum performance and security are priorities, since there’s no network exposure and communication happens through native OS mechanisms.
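The child-process pattern described here can be sketched with Python's standard library. This is a minimal illustration, assuming one JSON message per line: the "server" is a hypothetical echo script passed inline to a child Python interpreter, not a real MCP server, and `call_over_stdio` is a made-up helper name.

```python
import json
import subprocess
import sys

# Hypothetical child "server": reads one JSON message per line on stdin
# and writes one JSON reply per line on stdout.
CHILD_SOURCE = r'''
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"], "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
'''


def call_over_stdio(method: str) -> dict:
    """Launch the child, send one request over its stdin, read one reply from its stdout."""
    proc = subprocess.Popen(
        [sys.executable, "-c", CHILD_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": method}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()      # end of input lets the child's read loop finish
        proc.wait(timeout=5)
        proc.stdout.close()


response = call_over_stdio("ping")
print(response)
```

No socket or port is opened at any point: the only channel is the pair of pipes the parent created, which is where the "no network exposure" property comes from.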

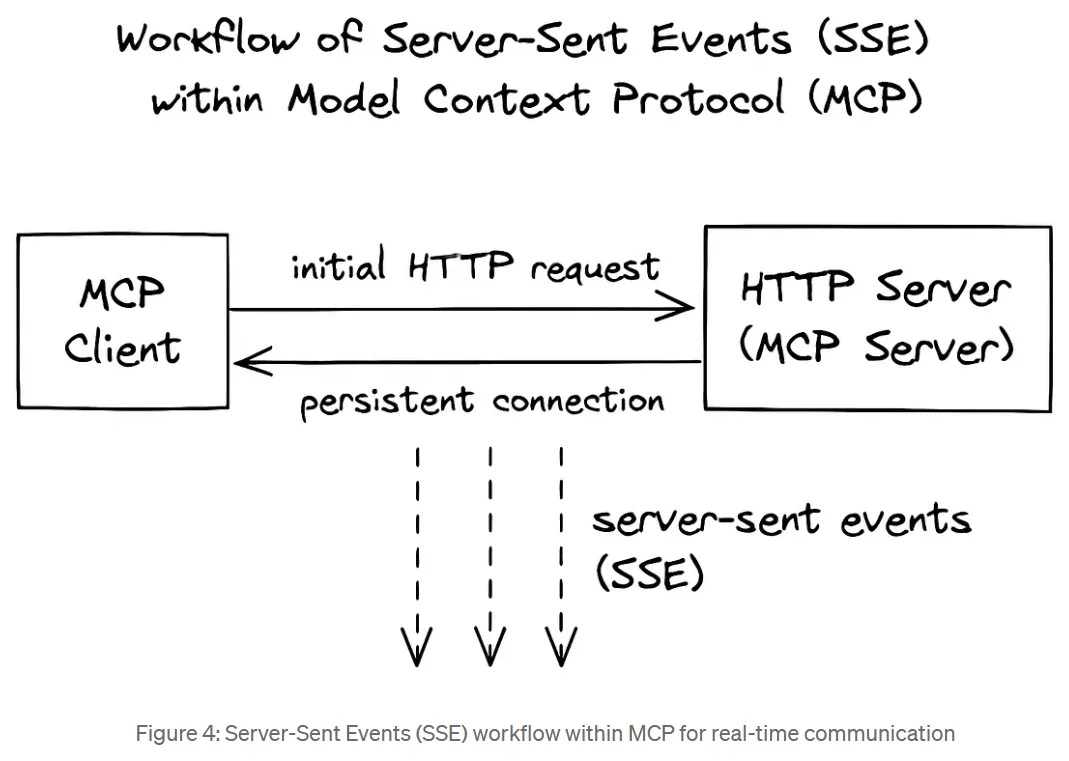

HTTP+SSE Transport: Real-Time Remote Connectivity

HTTP with Server-Sent Events (SSE) enables MCP clients to connect to remote servers while maintaining real-time communication capabilities. The client sends requests via standard HTTP, while the server pushes live updates, progress notifications, and streaming responses back through an SSE connection. This transport method is ideal for web applications, cloud deployments, and scenarios requiring scalability, as it allows multiple clients to connect to a single server while providing instant server-to-client communication for dynamic AI workflows and collaborative features.
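On the wire, an SSE stream is plain text: `event:` and `data:` lines, with a blank line ending each event. The sketch below parses a hard-coded sample instead of opening a real HTTP connection. The `parse_sse` helper is hypothetical and handles only the `event` and `data` fields, and the JSON payloads in the sample are invented for illustration.

```python
import json
from typing import Iterable, Iterator, List, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, str]]:
    """Yield (event name, data) pairs from the lines of an SSE stream."""
    event = "message"
    data: List[str] = []
    for raw in lines:
        line = raw.rstrip("\r\n")
        if line == "":
            # A blank line dispatches the event collected so far.
            if data:
                yield event, "\n".join(data)
            event, data = "message", []
        elif line.startswith(":"):
            continue  # comment line, often used as a keep-alive
        else:
            field, _, value = line.partition(":")
            if value.startswith(" "):
                value = value[1:]
            if field == "event":
                event = value
            elif field == "data":
                data.append(value)


# Invented sample: one progress-style notification followed by a final response.
stream = [
    ": keep-alive\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "method": "example/progress", "params": {"percent": 50}}\n',
    "\n",
    "event: message\n",
    'data: {"jsonrpc": "2.0", "id": 1, "result": {"status": "done"}}\n',
    "\n",
]

messages = [json.loads(payload) for _, payload in parse_sse(stream)]
print(len(messages), messages[1]["result"]["status"])
# prints "2 done"
```

Because each event is just a JSON-RPC message wrapped in SSE framing, the server can push notifications and responses over the same long-lived connection.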

Deploying Your MCP Server: Focus on Docker

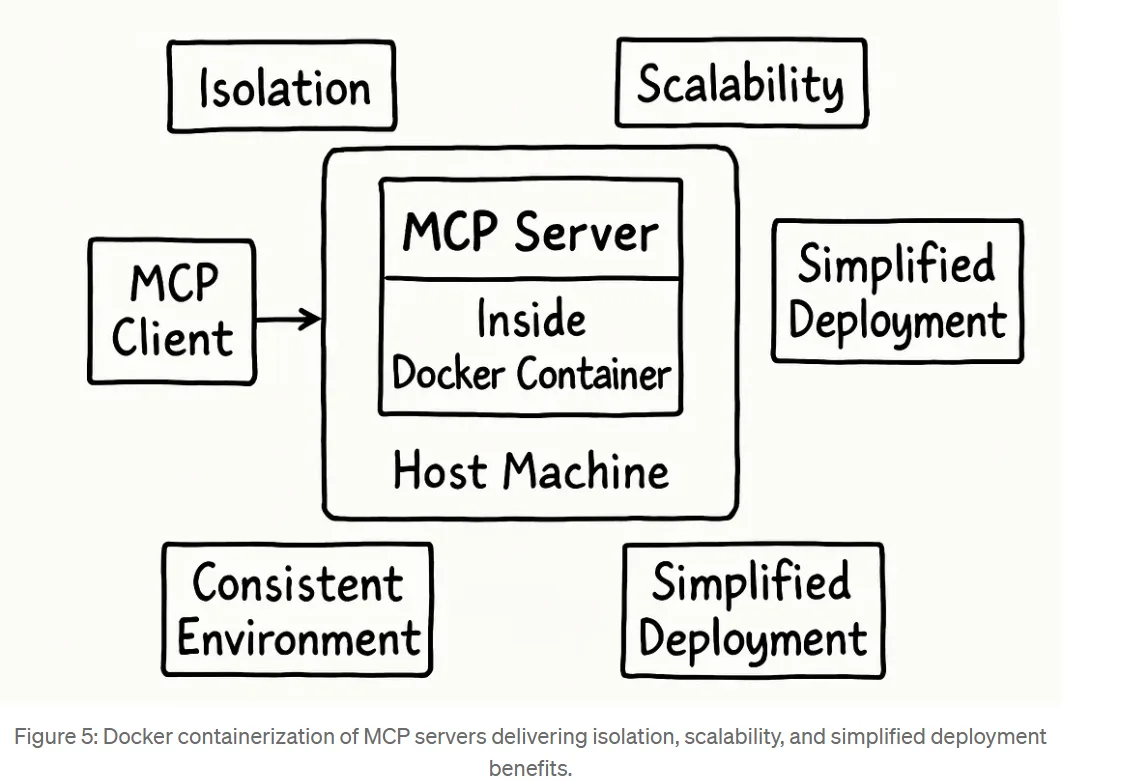

Once built, an MCP server needs to be deployed. While local (STDIO) and remote (HTTP+SSE) methods exist, Docker containers are the most robust and widely adopted deployment strategy for modern applications, including MCP servers.

Why Dockerized Deployment is Superior:

Docker packages your MCP server and all its dependencies into a single, isolated unit. This approach offers significant advantages:

- Enhanced Security: Each server runs in its own isolated environment, preventing conflicts and limiting the impact of potential security breaches. This sandboxing helps protect your system from vulnerabilities within the server.

- Guaranteed Consistency: A Docker image ensures your server behaves identically across any environment, from your development machine to a production cloud. This eliminates compatibility issues and streamlines testing and deployment.

- Effortless Scalability: Docker containers are lightweight and start quickly, making it easy to scale your MCP services up or down as demand changes. Tools like Kubernetes can automate this scaling, ensuring high availability.

- Simplified Management: All server dependencies are bundled within the Docker image, simplifying setup and avoiding conflicts. Versioning and easy rollbacks also provide a safety net for updates.

Docker’s widespread adoption and inherent benefits make it the ideal choice for deploying secure, portable, and scalable MCP servers, ensuring your AI integrations are robust and reliable.

The Future is Now: Real World MCP Examples

The Model Context Protocol isn’t just theoretical; it’s paving the way for truly intelligent and integrated AI applications. Here are three forward-looking examples of how MCP could revolutionise various sectors:

- Personalised Healthcare AI: Imagine an MCP-powered AI assistant that securely connects to your electronic health records (EHR), wearable device data, and real-time medical research databases. This AI could proactively analyse your health trends, suggest personalised preventative measures, alert your doctor to anomalies, and even recommend tailored treatment plans based on the latest clinical trials, all while maintaining strict data privacy through MCP’s secure communication.

- Autonomous Smart City Management: An MCP-enabled central AI system could integrate with diverse city infrastructure: traffic sensors, public transport networks, energy grids, and waste management systems. This AI could dynamically optimise traffic flow, predict and prevent energy overloads, manage public safety responses, and even coordinate autonomous delivery services, all by invoking specific tools and accessing real-time resources exposed by various city departments via MCP servers.

- Hyper-Efficient Supply Chain Orchestration: Envision an AI orchestrator that uses MCP to connect with global logistics providers, manufacturing plants, inventory systems, and real-time market demand data. This AI could predict supply chain disruptions, automatically re-route shipments, optimise production schedules, and even negotiate new contracts with suppliers, ensuring seamless and resilient global trade by leveraging a network of interconnected MCP servers.

Concluding Thoughts

The Model Context Protocol is more than just a technical specification; it’s a foundational step towards a future where AI is seamlessly integrated into every facet of our digital and physical lives. By providing a standardised, secure, and scalable way for AI models to interact with the world’s data and tools, MCP unlocks unprecedented possibilities for innovation, efficiency, and intelligent automation. The journey towards truly autonomous and context-aware AI agents is well underway, and MCP is a critical enabler of this transformative era.